A measure has face validity when people judge that it indeed measures what it is supposed to measure.

Researchers distinguish three kinds of validity: (1) face validity, (2) construct validity, and (3) criterion validity. Face validity refers to the extent to which a measure appears to measure what it should measure.

External validity refers to the extent to which the research results can be generalized to other samples. If participants in different conditions differ systematically on more than the independent variable, the study is confounded. Experimental control guards against this by ensuring that only the independent variable differs between the conditions.

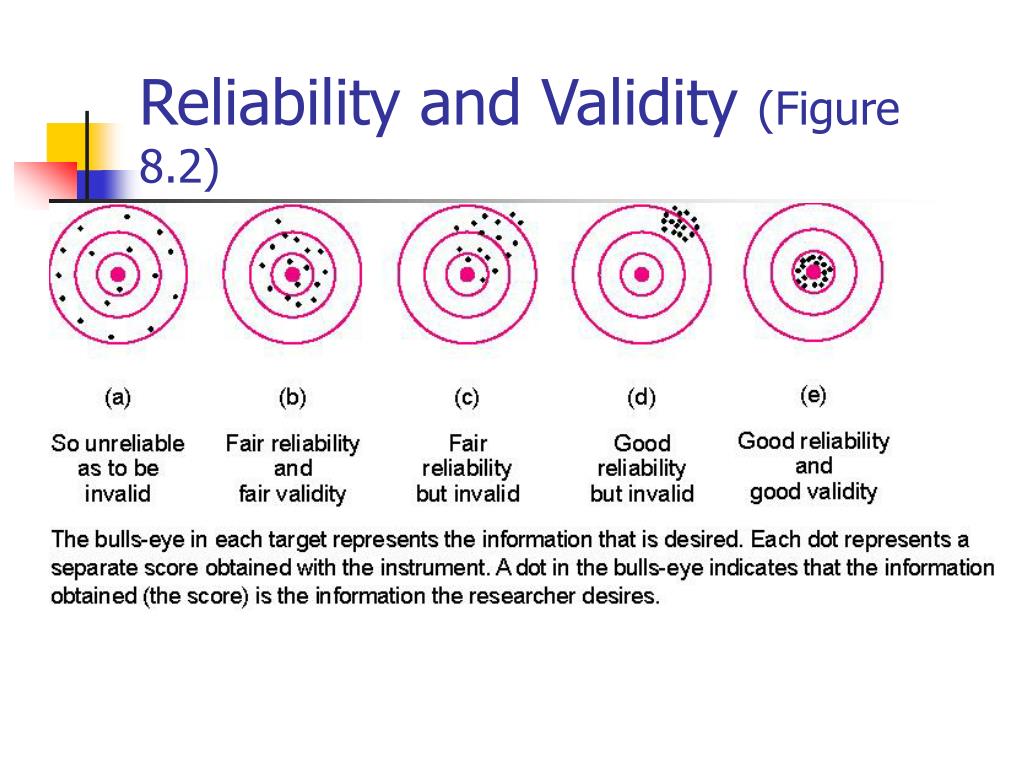

Validity refers to the extent to which a measurement technique measures what it should measure. The question is thus whether we measure what we want to measure. It is important to note that reliability and validity are two different things: a measurement instrument can be reliable without being valid. High reliability tells us that the instrument measures something, but not exactly what it measures. To discover that, we have to check the validity of the instrument. Validity is not a fixed characteristic of a measurement technique or instrument: a measure can be valid for one aim while not being valid for another.

A subdivision is made into internal validity and external validity. Internal validity refers to drawing correct conclusions about the effects of the independent variable, and it is warranted by experimental control.
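The point that an instrument can be reliable without being valid can be illustrated with a small sketch. It assumes, purely for illustration, that reliability is estimated as the correlation between two administrations of the same instrument and that validity is estimated as the correlation with an outside criterion. All scores and the helper `pearson_r` are hypothetical.

```python
from math import sqrt

def pearson_r(x, y):
    """Pearson correlation between two equally long lists of numbers."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)

# Hypothetical scores of six participants on the same instrument, measured twice.
first_administration = [1, 2, 3, 4, 5, 6]
second_administration = [1, 2, 3, 4, 6, 5]

# Hypothetical scores on the criterion the instrument is supposed to predict.
criterion = [3, 5, 1, 6, 2, 4]

# Assumption: consistency across administrations stands in for reliability.
reliability_estimate = pearson_r(first_administration, second_administration)

# Assumption: correlation with the criterion stands in for validity.
validity_estimate = pearson_r(first_administration, criterion)

print(f"reliability estimate: {reliability_estimate:.2f}")
print(f"validity estimate:    {validity_estimate:.2f}")
```

In this made-up data the two administrations agree closely, so the instrument measures something consistently, yet the scores are almost unrelated to the criterion. Consistency alone therefore says nothing about what is being measured.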

Inter-rater reliability is also called 'inter-judge' or 'inter-observer' reliability. It refers to the extent to which two or more observers observe and code the behavior of participants in the same way. When the observers make similar judgements (that is, when inter-rater reliability is high), the correlation between their judgements should be high. Measurement techniques should not only be reliable, but also valid.
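As a rough illustration of checking agreement between observers, the sketch below correlates the ratings of two observers who coded the same participants. The ratings, the 1 to 7 scale, and the helper `pearson_r` are assumptions made for this example. A Pearson correlation is only one of several possible agreement indices.

```python
from math import sqrt

def pearson_r(x, y):
    """Pearson correlation between two equally long lists of numbers."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)

# Hypothetical data: two observers rate the same six participants (1-7 scale).
observer_a = [2, 4, 5, 3, 6, 7]
observer_b = [3, 4, 5, 2, 6, 7]

# A value close to 1 means the observers ordered the participants similarly.
inter_rater_r = pearson_r(observer_a, observer_b)
print(f"inter-rater correlation: {inter_rater_r:.2f}")
```

Because a correlation only captures whether the observers rank participants similarly, two observers who differ by a constant amount would still correlate perfectly. Depending on the coding scheme, an agreement index for categorical codes may be more appropriate.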
